Spatial-MemER

Spatial memory for hierarchical VLA policies.

MemER's keyframes capture what the robot saw — but not where. We add egocentric spatial context by computing camera poses via DPVO and forward kinematics, rendered as a bird's-eye map the high-level policy (VLM) can directly perceive.

Extending MemER: Scaling Up Memory for Robot Control via Experience Retrieval

We didn't have a robot... so we used a chest-mounted iPhone 16 Pro running DPVO for localization, constrained Mark's arm to a single DOF with 45° discretized joint angles, and computed FK manually from video. Keyframes and subtasks are simulated based on what we expect MemER would output.

Why Spatial-MemER?

Humans don't just remember what they saw — they remember where they saw it. Think about making an omelette: you recall the pan, but also that it's in the cabinet left of the stove. Spatial context is inseparable from visual memory.

MemER partially captures this through its exocentric camera, which observes the robot arm from a third-person view. But this approach has limits:

- 1.VLMs struggle with 3D spatial reasoning, particularly in multi-view contexts where reasoning complexity increases and models become more prone to hallucinations (Yu et al., 2025). This extends to occlusions and viewpoint-dependent relations like "behind" or "in front of."

- 2.It breaks down entirely when the robot can move and explore: the exocentric frame no longer anchors anything.

We wanted to give the policy explicit spatial context through an egocentric map, a direct visual representation of where keyframes were captured relative to the robot's current pose. More on the mechanism below.

This is a starting point. Explicit maps are interpretable and easy to integrate, but we're ultimately interested in whether spatial context could be encoded implicitly, analogous to how positional embeddings like RoPE encode token position in text. Could we learn spatial encodings over visual tokens?

Architecture

Spatial-MemER is built to sit on top of the existing MemER architecture. In fact, you can integrate our approach into MemER with just a few lines of code, which runs at 1Hz within the high-level policy. We designed the map module to be customizable through a MapConfig dataclass, making it easy to experiment with different map layouts to see what the VLM responds to best.

At a high level, Spatial-MemER does two things: (1) store the end-effector pose at each frame, and (2) generate an egocentric BEV map which is passed as an additional image to the high-level policy. More on our localization approach below.

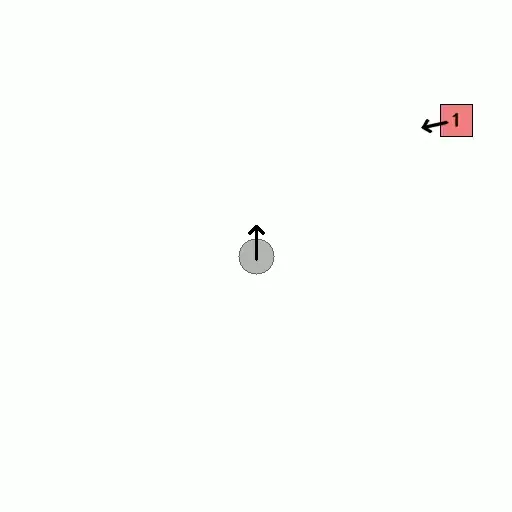

The egocentric map displays keyframes at their position relative to the robot, with arrows indicating orientation. Map scale is computed relative to the farthest keyframe from the robot, normalizing positions so the map uses its full extent regardless of how spread out keyframes are. To prevent nearby keyframes from clustering, we run outlier detection on keyframe distances: anything beyond 2σ is clamped to the edge of the map rather than distorting the scale. Each keyframe image is watermarked with a colored and numbered square that matches its marker on the map, using colors opposite on the color wheel to help the VLM distinguish between them. We also developed overlap prevention for map markers, though this is not shown in the demo.

Spatial map from kitchen demo. The robot is always centered, facing up. Numbered markers show where keyframes were captured relative to the robot's current position.

MemER's clustering algorithm can promote non-current frames to keyframe status, but we only have access to the current robot state, which we need to compute the camera pose at that frame. To handle this, we maintain a two-part system: all frames are added to a pose history as they arrive, and frames can later be promoted to keyframes when the clustering algorithm selects them.

The entire interface is accessible through the SpatialContext class. Here's an example for a stationary robot setup:

# Initialize

ctx = SpatialContext()

# In the high-level policy loop (1 Hz)

robot_state = robot.get_state() # 7-DOF joint angles

# 1. Add current frame to pose history

frame_id = ctx.add_frame(robot_state)

# 2. Generate the egocentric spatial map

map_image, colors = ctx.generate_map()

# 3. Watermark keyframe images with map markers

watermarked_keyframes = ctx.watermark_keyframes(keyframe_images, colors)

# 4. Promote important frames to keyframes (when selected by MemER)

ctx.promote_to_keyframe(frame_id)

# Feed map_image + watermarked_keyframes to the VLM and repeat!Localization

To obtain the camera pose, Spatial-MemER can run in two modes: stationary, where the robot base is fixed and the end-effector pose can be computed through forward kinematics, and mobile, where the robot moves through an environment and we need visual odometry to track its position. In both cases, the map is always represented in end-effector coordinates in the world frame.

For Stationary Robots

Robots clamped to a table with precise actuators:

Joint Angles → Forward Kinematics → Camera Pose → Spatial Map

(7-DOF) (SE(3) 4×4) (World) (Egocentric BEV)We use the Franka Emika Panda (FR3) arm described in the MemER paper and compute the end-effector pose through the MuJoCo physics library given the robot's joint state. With a stationary base and precise actuators, forward kinematics provides exact pose: no SLAM needed. The robot state is already available in MemER's observation loop, making integration truly plug and play.

# Stationary robot localization

robot_state = robot.get_state() # 7-DOF joint angles

camera_pose = forward_kinematics(robot_state) # SE(3) via MuJoCo

ctx.add_frame(camera_pose)For Mobile Robots

Robots with moving bases:

RGB Frames → DPVO (Deep Patch Visual Odometry) → Robot Pose (World) + FK → Spatial MapWe use Deep Patch Visual Odometry (DPVO) to track the robot's base pose. Our approach runs DPVO in parallel at 15Hz on the exocentric camera, which has a better view of the environment. Running at higher frequency means more images and more stable pose estimates. Whenever we need a pose, it's already available from the parallel thread.

DPVO eliminates the overhead of approaches that also predict a point cloud of the environment, focusing purely on visual odometry. The result is an algorithm that runs 1.5-8.9x faster than DROID-SLAM and comfortably hits 15Hz (Teed et al., 2023).

# Mobile robot localization

dpvo_pose = dpvo.get_latest_pose() # Exocentric camera pose from visual odometry

robot_state = robot.get_state() # Joint angles

base_pose = dpvo_pose @ T_exo_to_base # Transform from exocentric camera to arm base

camera_pose = base_pose @ forward_kinematics(robot_state) # Compose base + arm

ctx.add_frame(camera_pose)Improvements to DPVO

For our demo, we used DPVO out of the box with iPhone 16 Pro camera intrinsics we tuned for the 0.5x lens we recorded with. It worked well, but we see two improvements for tighter integration:

- 1.DPVO randomly samples image patches and tracks them across frames using learned correlation features. We found it struggled with the robot arm (or in our demo, Mark's arm) always in view, as patches sampled from the arm produce inconsistent motion estimates. Given the robot state, you could generate a projected mask of the arm onto the exocentric camera and prevent DPVO from sampling patches in that region.

- 2.DPVO initializes patch depth as the median depth of patches from the previous three frames, then refines it through differentiable bundle adjustment. But MemER uses RGB-D cameras, and our iPhone 16 Pro has depth from its LiDAR sensor. Replacing the initialized depth values with ground truth from the RGB-D feed should improve convergence and accuracy.

Next Steps

Data collection without a robot. We didn't have access to a robot arm, so we improvised. Mark wore a chest-mounted iPhone 16 Pro and a wrist-mounted GoPro, constrained his arm to a single degree of freedom, and performed kitchen tasks while we recorded. To compute FK, we reviewed the footage and manually logged the shoulder angle at each frame—discretizing to 45° increments made this easy to eyeball. DPVO ran on the chest camera for localization. It's scrappy, but it validated the full pipeline: localization, pose storage, map generation, and keyframe correspondence all work end-to-end.

Finetuning for spatial dependence. The risk with adding spatial context is that the VLM ignores it. If the exocentric camera in MemER already provides enough information to complete the task, the high-level policy has no incentive to attend to the map. To force spatial dependence, we plan to finetune the high-level policy (Qwen2.5-VL-7B-Instruct) on a mix of tasks: some where the map is necessary, some where it isn't. This prevents the model from losing its ability to reason from frames alone. Tasks requiring the map include objects not visible in any current frame, disambiguation between identical objects, and navigation-first scenarios. The training signal should teach the model when to use spatial context, not just that it exists.

What we want to test:

- 1.Does spatial context hurt when unnecessary? We need ablations on standard MemER tasks to ensure the map doesn't degrade performance when spatial reasoning isn't required.

- 2.Does it help when it should? We want to evaluate on tasks designed to require spatial reasoning: mobile robots navigating between rooms, cluttered stationary environments, and return-to-location tasks where the robot must revisit a previously seen object.

- 3.Benchmarking spatial capacity. There's no standard benchmark for spatial reasoning in VLA/hierarchical policies. The closest work is SIBench (Yu et al., 2025), which evaluates spatial reasoning in VLMs across tasks like distance estimation, spatial relations, and multi-view reasoning. But robotics introduces new challenges: the agent is embodied, the spatial context evolves over time, and reasoning must translate into action. We'd like to develop a benchmark tailored to VLAs that measures: (a) recall, can the model identify where a keyframe was captured, (b) relational reasoning, can it answer questions like "which keyframe is closest to the robot," (c) planning, can it use spatial context to choose efficient action sequences, and (d) cost, does passing an explicit map consume the VLM's context window in ways that hurt downstream task performance?

- 4.Environment preloading. An exciting direction is loading spatial priors into a policy before deployment. Instead of learning an environment through exploration, you bootstrap with a map, like giving a new employee a tour before their first shift. This could dramatically reduce the data needed for new environments.

- 5.Implicit spatial representations. We know explicit maps aren't the most elegant way to represent space, but they prove the concept. Ultimately, we want to explore different ways to imbue policies with spatial awareness. Could spatial context be encoded directly into visual token embeddings, analogous to how RoPE encodes token position in text? The map is a starting point; implicit representations are where this research goes next.

Next Steps. The MemER codebase isn't publicly available yet, and we don't have access to robot hardware, which limits how far we can push these ideas experimentally. We see Spatial-MemER as an initial exploration rather than a complete solution, and we're very open to collaborating with others interested in extending, evaluating, or stress-testing these ideas in more realistic robotic settings.